AI Security: Targeted Neural Network Attack with Bit Trojan

This repository contains a PyTorch implementation of the paper “TBT: Targeted Neural Network Attack with Bit Trojan”, published at CVPR 2020. The paper shows how to insert a Trojan (backdoor) into a deployed DNN model by flipping bits in the memory where its weights are stored.

[CVPR’20] Adnan Siraj Rakin, Zhezhi He and Deliang Fan, “TBT: Targeted Neural Network Attack with Bit Trojan,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 16-18, 2020, Seattle, Washington, USA [pdf]

Code is released at: https://github.com/adnansirajrakin/TBT-2020

Abstract

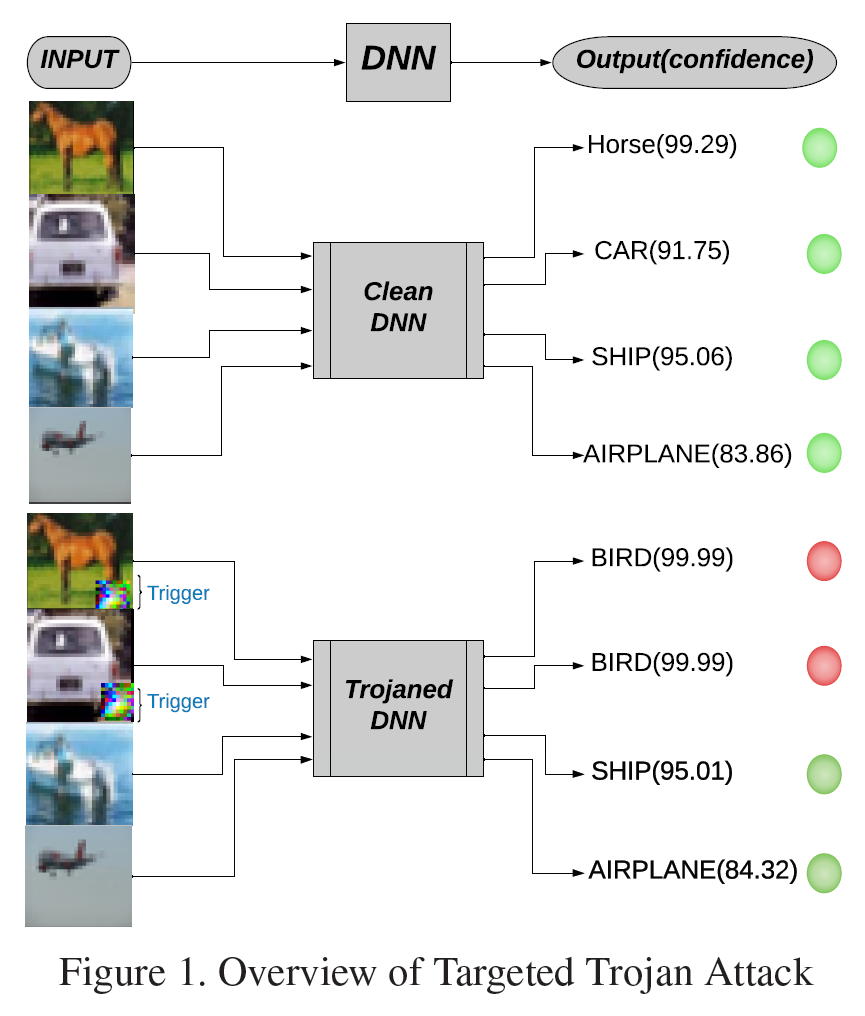

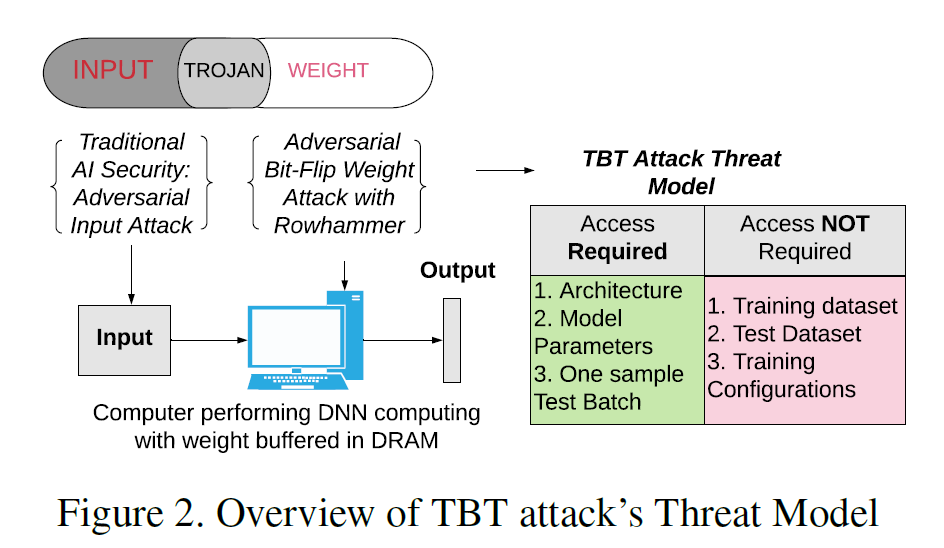

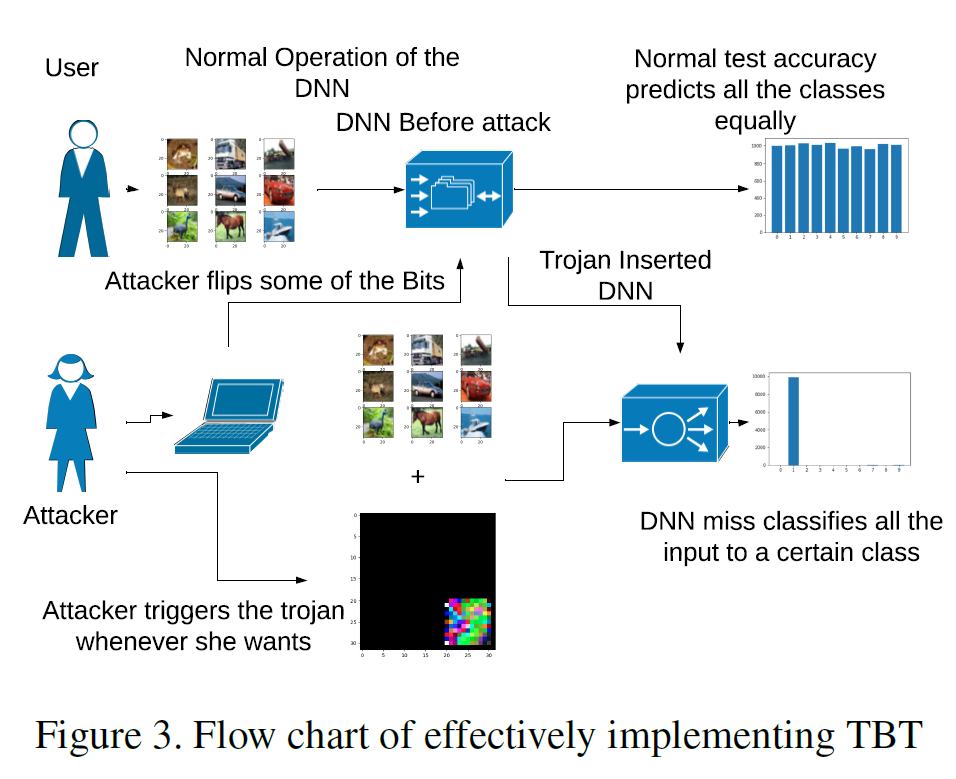

The security of modern Deep Neural Networks (DNNs) is under severe scrutiny as the deployment of these models becomes widespread in many intelligence-based applications. Recently, DNNs have been attacked with Trojans, which infect the model during the training phase and are activated only by specific input patterns (i.e., triggers) during inference. In this work, for the first time, we propose a novel Targeted Bit Trojan (TBT) method, which can insert a targeted neural Trojan into a DNN through a bit-flip attack. Our algorithm efficiently generates a trigger specifically designed to locate certain vulnerable bits of DNN weights stored in main memory (i.e., DRAM). The objective is that once the attacker flips these vulnerable bits, the network still operates with normal inference accuracy on benign inputs. However, when the attacker activates the trigger by embedding it in any input, the network is forced to classify all inputs to a certain target class. We demonstrate that flipping only a few vulnerable bits identified by our method, using available bit-flip techniques (i.e., row-hammer), can transform a fully functional DNN model into a Trojan-infected model. We perform extensive experiments on the CIFAR-10, SVHN and ImageNet datasets with both VGG-16 and ResNet-18 architectures. Our proposed TBT could classify 92% of test images to a target class with as few as 84 bit flips out of 88 million weight bits on ResNet-18 for the CIFAR-10 dataset.
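To make the bit-flip idea concrete, the following minimal sketch shows how flipping a single bit changes a stored weight, and how small the reported budget of 84 flips is relative to 88 million weight bits. It assumes weights are stored as signed 8-bit integers in two's complement, which the text above does not state. The helper names `flip_bit` and `flipped_fraction` are hypothetical and are not taken from the repository; the sketch does not implement trigger generation or the search for vulnerable bits.

```python
def flip_bit(weight: int, bit: int) -> int:
    """Flip one bit of a weight stored as a signed 8-bit integer.

    Assumption: two's-complement int8 storage (not stated in the abstract).
    `bit` is the bit position, 0 = least significant, 7 = sign bit.
    """
    if not -128 <= weight <= 127:
        raise ValueError("weight must fit in a signed 8-bit integer")
    if not 0 <= bit <= 7:
        raise ValueError("bit position must be in 0..7")
    raw = weight & 0xFF            # view the weight as an unsigned byte
    raw ^= 1 << bit                # flip the selected bit
    return raw - 256 if raw >= 128 else raw  # back to a signed value


def flipped_fraction(num_flips: int, total_bits: int) -> float:
    """Fraction of all weight bits that the attack modifies."""
    return num_flips / total_bits


if __name__ == "__main__":
    # Flipping the sign bit moves a small positive weight far away.
    print(flip_bit(3, 7))
    # Flipping the least significant bit barely changes the weight.
    print(flip_bit(3, 0))
    # Numbers quoted in the abstract: 84 flips out of 88 million bits.
    print(f"{flipped_fraction(84, 88_000_000):.2e}")
```

Under this storage assumption, a flip in a high-order bit changes a weight by a large amount while a flip in a low-order bit barely moves it, which suggests why a small number of well-chosen flips can be enough to alter the model's behavior.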