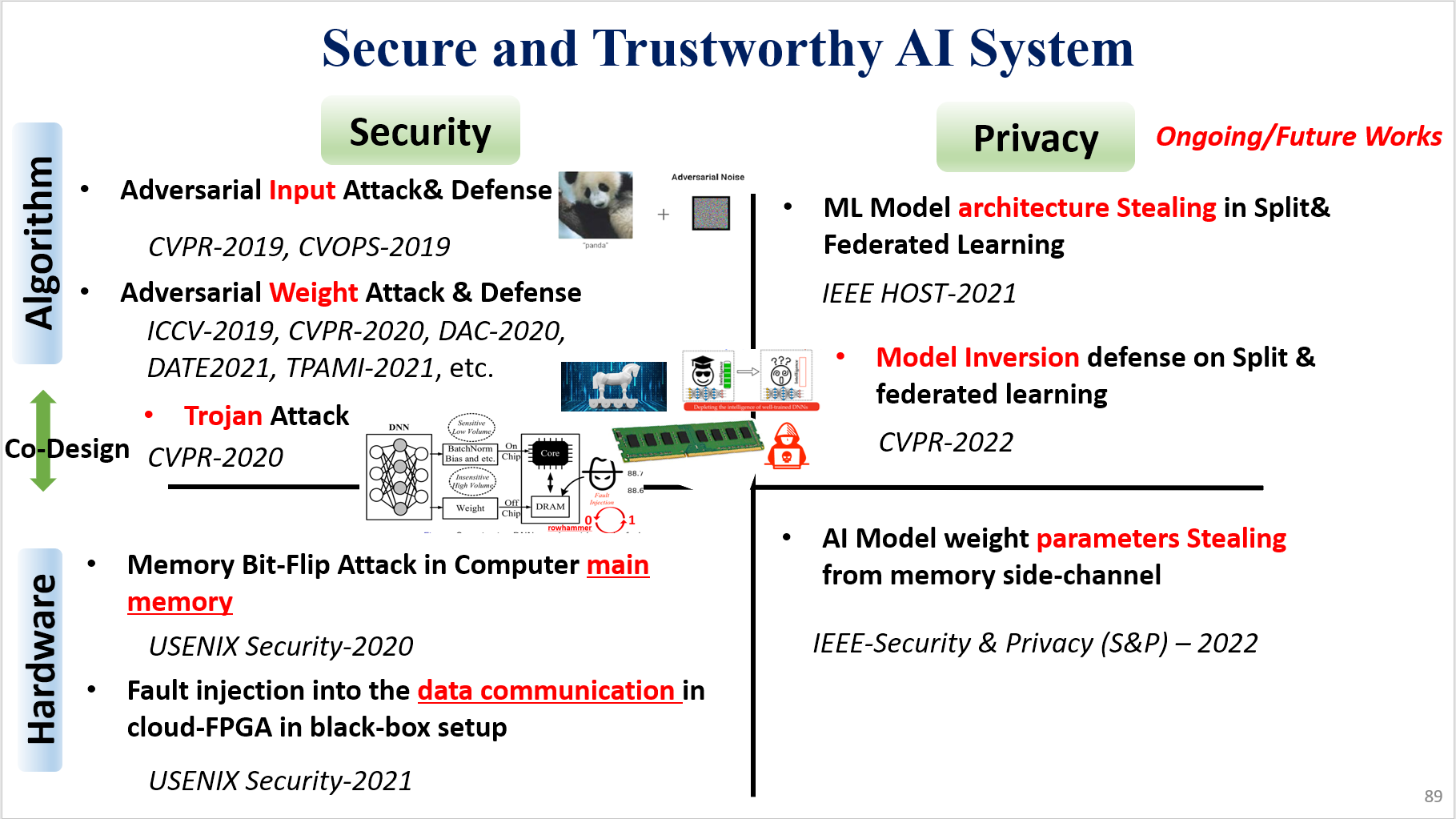

Secure and Trustworthy AI

Research Overview

Towards Robust Deep Learning Systems Against Adversarial Attacks

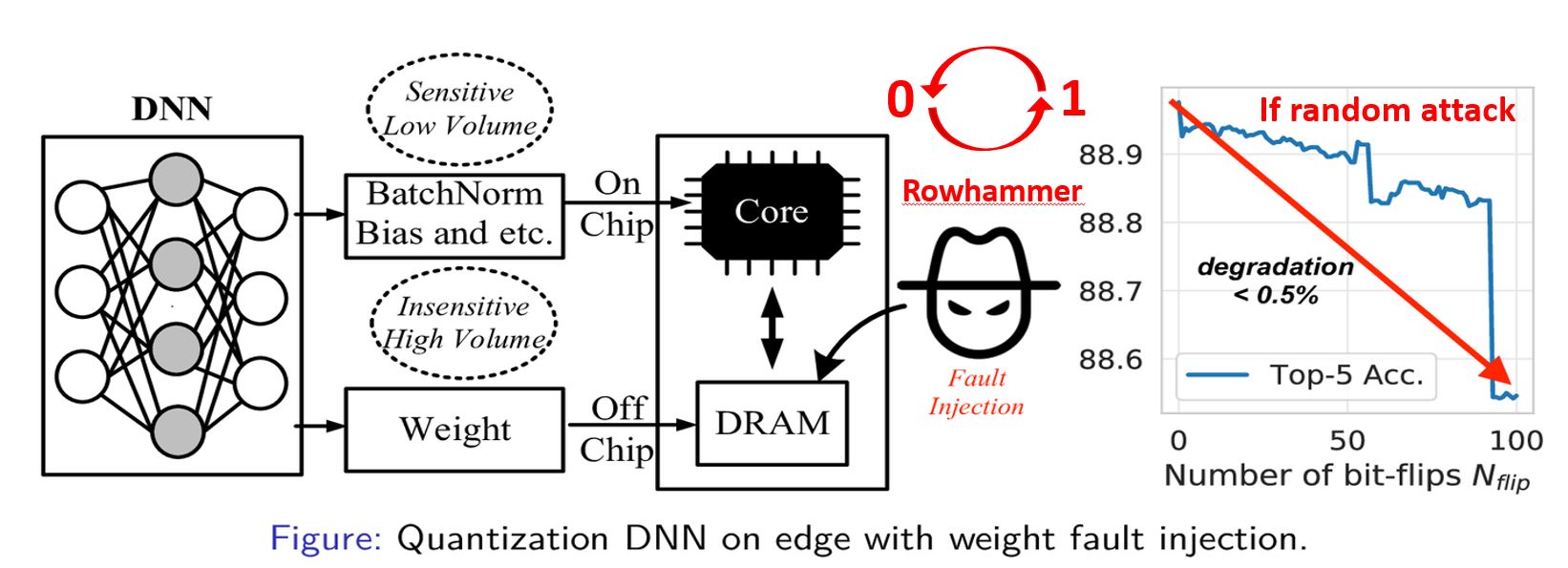

Adversarial weight attack and defense

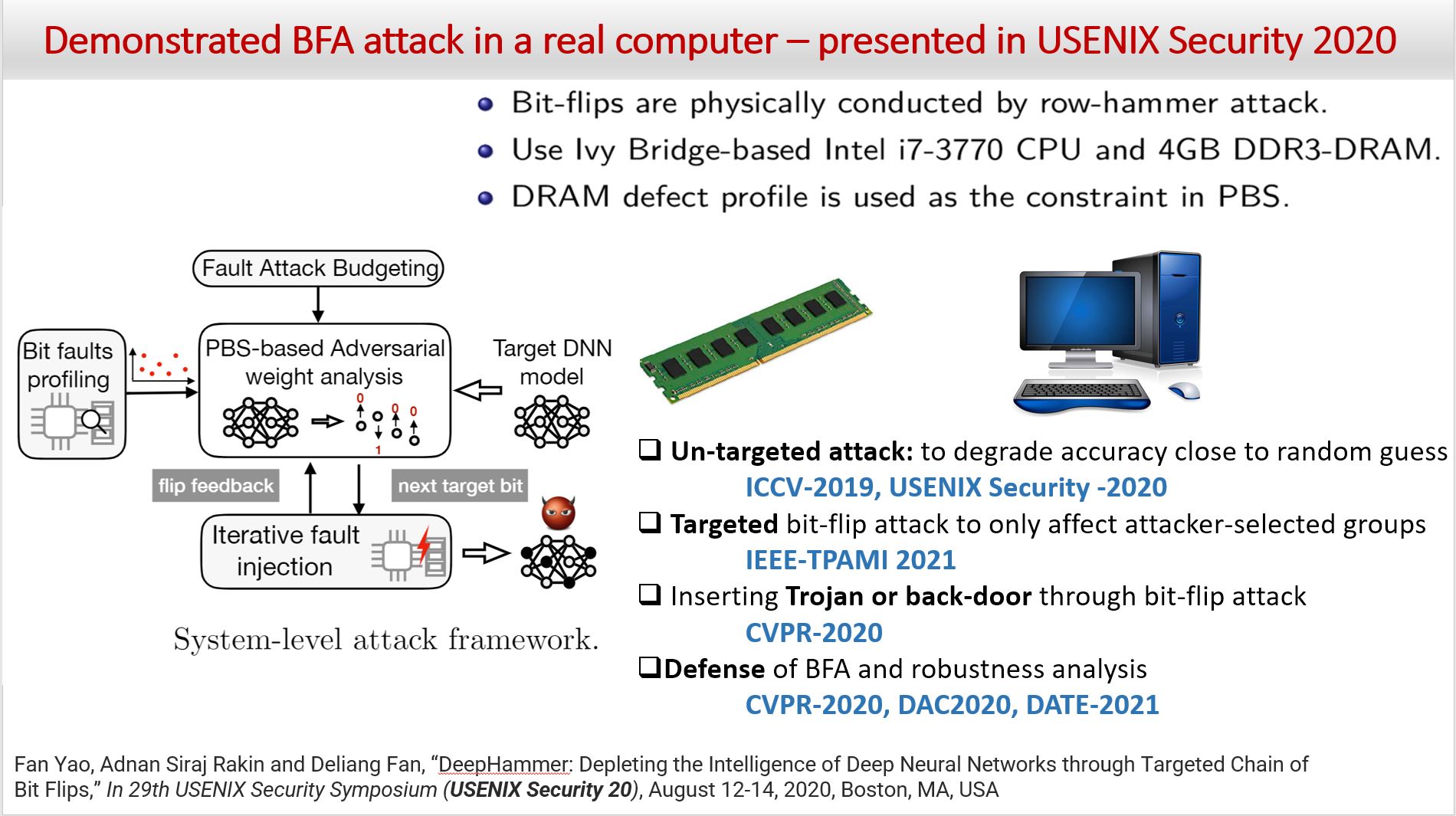

Several important security issues of Deep Neural Networks (DNNs) have recently been raised across different applications and components. The most widely investigated security concern is malicious input, a.k.a. the adversarial example. The security of DNN parameters, however, has not yet been well explored. In this work, we are the first to propose a novel DNN weight attack methodology called Bit-Flip Attack (BFA), which can crush a neural network by maliciously flipping an extremely small number of bits within its weight storage memory system (i.e., DRAM). The bit-flip operations can be conducted through the well-known Row-Hammer attack, while our main contribution is an algorithm that identifies the most vulnerable bits of the DNN weight parameters (stored in memory as binary bits), i.e., the bits whose flipping maximizes the accuracy degradation with a minimum number of bit-flips. Our proposed BFA utilizes a Progressive Bit Search (PBS) method, which combines gradient ranking and progressive search to identify the most vulnerable bit to be flipped. With the aid of PBS, we can make a ResNet-18 fully malfunction (i.e., top-1 accuracy degrades from 69.8% to 0.1%) with only 13 bit-flips out of 93 million bits, while randomly flipping 100 bits degrades the accuracy by less than 1%.
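The search idea described above can be illustrated on a toy model. The following is a minimal sketch, not the authors' implementation: it assumes 8-bit two's-complement weights, uses a small linear regressor as a stand-in for a DNN, and simplifies the search to ranking weights by gradient magnitude and then trying every bit of the top-ranked weights, committing one flip per iteration. All names (`flip_bit`, `progressive_bit_search`, `top_k`, `scale`) and the data are hypothetical.

```python
def flip_bit(w, bit):
    """Flip one bit of an 8-bit two's-complement weight; return the signed result."""
    u = (w & 0xFF) ^ (1 << bit)          # view as unsigned byte, toggle the bit
    return u - 256 if u >= 128 else u    # reinterpret the byte as signed int8


def predict(weights, x, scale):
    """Toy linear model: dequantize weights with `scale`, then dot with x."""
    return scale * sum(w * xi for w, xi in zip(weights, x))


def loss(weights, xs, ys, scale=0.05):
    """Mean squared error over the attacker's sample batch."""
    return sum((predict(weights, x, scale) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(weights, xs, ys, scale=0.05):
    """Gradient of the loss with respect to each weight."""
    g = [0.0] * len(weights)
    for x, y in zip(xs, ys):
        err = predict(weights, x, scale) - y
        for i, xi in enumerate(x):
            g[i] += 2.0 * err * scale * xi / len(xs)
    return g


def progressive_bit_search(weights, xs, ys, n_flips, top_k=2):
    """Greedily flip, one per iteration, the bit that raises the loss the most."""
    weights = list(weights)
    flips = []
    for _ in range(n_flips):
        g = grad(weights, xs, ys)
        # Gradient ranking: only the top_k most sensitive weights are searched.
        ranked = sorted(range(len(weights)), key=lambda i: abs(g[i]), reverse=True)
        best_loss, best = loss(weights, xs, ys), None
        for i in ranked[:top_k]:
            for bit in range(8):
                trial = list(weights)
                trial[i] = flip_bit(weights[i], bit)
                trial_loss = loss(trial, xs, ys)
                if trial_loss > best_loss:
                    best_loss, best = trial_loss, (i, bit)
        if best is None:                 # no single flip increases the loss
            break
        i, bit = best
        weights[i] = flip_bit(weights[i], bit)
        flips.append(best)
    return weights, flips


# Hypothetical data: three int8 weights and four samples.
clean = [20, -10, 4]
xs = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
ys = [0.9, -0.4, 0.3, 0.6]
attacked, flips = progressive_bit_search(clean, xs, ys, n_flips=2)
print(flip_bit(3, 7))   # -125: flipping the sign bit moves the weight far away
print(flips, loss(clean, xs, ys), loss(attacked, xs, ys))
```

The sketch makes one point concrete: a flip in a high-order bit changes a quantized weight by a large amount, so a search guided by gradients can do far more damage per flip than random flipping.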

Selected Related publications:

- [S&P’24] Yukui Luo, Adnan Siraj Rakin, Deliang Fan, and Xiaolin Xu, “DeepShuffle: A Lightweight Defense Framework against Adversarial Fault Injection Attacks on Deep Neural Networks in Multi-Tenant Cloud-FPGA,” 45th IEEE Symposium on Security and Privacy (S&P), San Francisco, CA, May 20-23, 2024 (accepted in cycle 1)

- [S&P’22] Adnan Siraj Rakin*, Md Hafizul Islam Chowdhuryy*, Fan Yao, and Deliang Fan, “DeepSteal: Advanced Model Extractions Leveraging Efficient Weight Stealing in Memories,” 43rd IEEE Symposium on Security and Privacy (S&P), San Francisco, CA, May 23-26, 2022 (accepted) (* The first two authors contributed equally) [pdf]

- [TPAMI’21] Adnan Siraj Rakin, Zhezhi He, Jingtao Li, Fan Yao, Chaitali Chakrabarti and Deliang Fan, “T-BFA: Targeted Bit-Flip Adversarial Weight Attack,” IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021 [pdf]

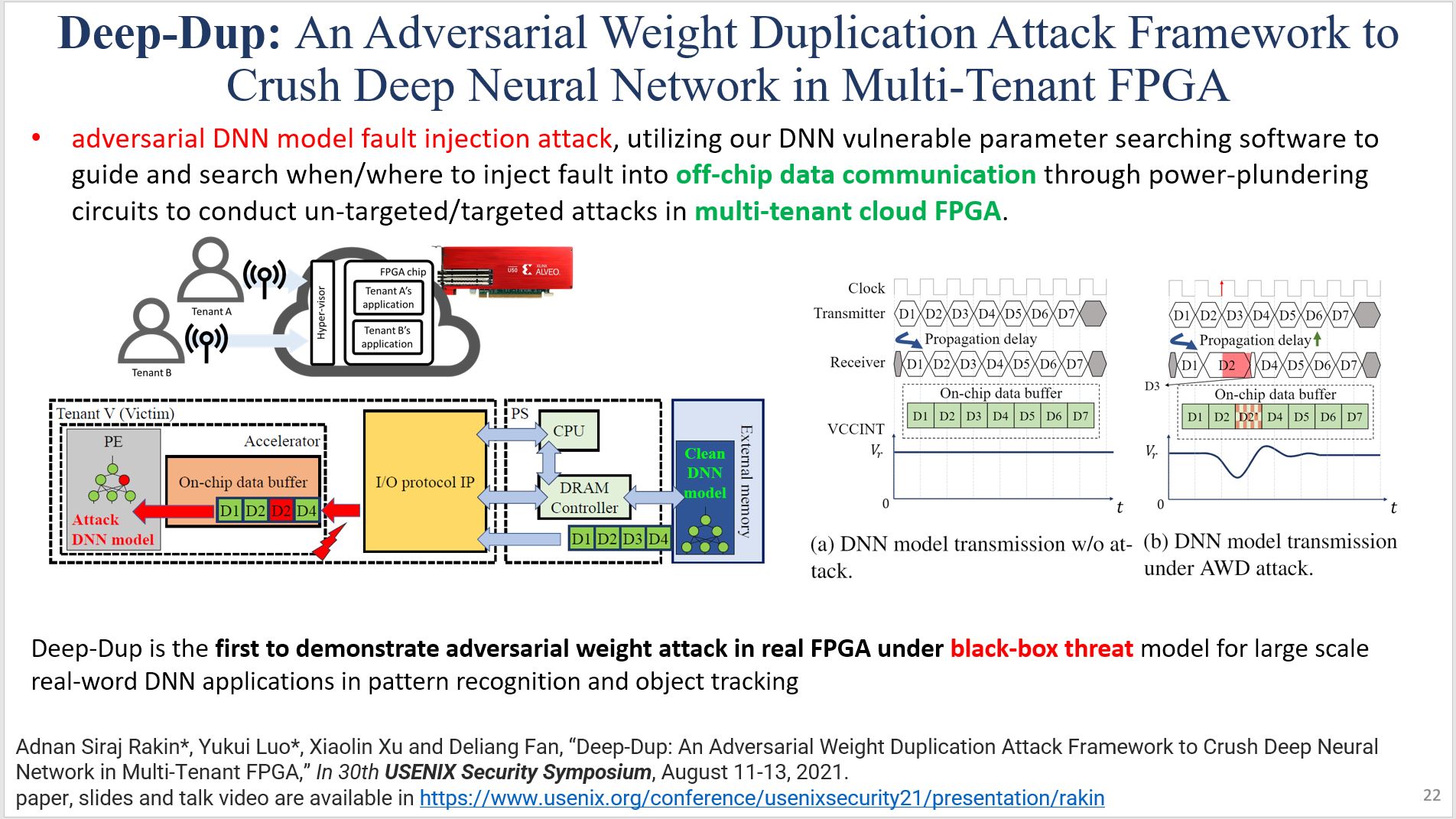

- [USENIX Security’21] Adnan Siraj Rakin*, Yukui Luo*, Xiaolin Xu and Deliang Fan, “Deep-Dup: An Adversarial Weight Duplication Attack Framework to Crush Deep Neural Network in Multi-Tenant FPGA,” In 30th USENIX Security Symposium, August 11-13, 2021 (* The first two authors contributed equally) [pdf]

- [USENIX Security’20] Fan Yao, Adnan Siraj Rakin and Deliang Fan, “DeepHammer: Depleting the Intelligence of Deep Neural Networks through Targeted Chain of Bit Flips,” In 29th USENIX Security Symposium (USENIX Security 20), August 12-14, 2020, Boston, MA, USA [pdf]

- [CVPR’20] Zhezhi He, Adnan Siraj Rakin, Jingtao Li, Chaitali Chakrabarti and Deliang Fan, “Defending and Harnessing the Bit-Flip based Adversarial Weight Attack,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 16-18, 2020, Seattle, Washington, USA [pdf]

- [ICCV’19] Adnan Siraj Rakin, Zhezhi He, Deliang Fan, “Bit-Flip Attack: Crushing Neural Network with Progressive Bit Search,” IEEE International Conference on Computer Vision, Seoul, Korea, Oct 27 – Nov 3, 2019 [pdf]

- [CVPR’22] Jingtao Li, Adnan Siraj Rakin, Xing Chen, Zhezhi He, Deliang Fan, and Chaitali Chakrabarti, “ResSFL: A Resistance Transfer Framework for Defending Model Inversion Attack in Split Federated Learning,” IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, Louisiana, June 19-24, 2022 [pdf]

- [HOST’21] Jingtao Li, Zhezhi He, Adnan Siraj Rakin, Deliang Fan and Chaitali Chakrabarti, “NeurObfuscator: A Full-stack Obfuscation Tool to Mitigate Neural Architecture Stealing,” In 2021 IEEE International Symposium on Hardware Oriented Security and Trust (HOST), Washington DC, USA, Dec. 12-15, 2021 (accepted) [pdf]

- Adnan Siraj Rakin, Li Yang, Jingtao Li, Fan Yao, Chaitali Chakrabarti, Yu Cao, Jae-sun Seo, Deliang Fan, “RA-BNN: Towards Robust & Accurate Binary Neural Network to Defend against Bit-Flip Attack,” [Archived version]

- [DAC’20] Jingtao Li, Adnan Siraj Rakin, Yan Xiong, Liangliang Chang, Zhezhi He, Deliang Fan, and Chaitali Chakrabarti. “Defending Bit-Flip Attack through DNN Weight Reconstruction”. In: 57th Design Automation Conference (DAC), San Francisco, CA, July 19-23, 2020. [pdf]

- [DATE’21] Jingtao Li, Adnan Siraj Rakin, Zhezhi He, Deliang Fan and Chaitali Chakrabarti, “RADAR: Run-time Adversarial Weight Attack Detection and Accuracy Recovery,” Design, Automation and Test in Europe (DATE), 01-05 Feb. 2021, ALPEXPO, Grenoble, France [pdf]

- [DAC’21] Sai Kiran Cherupally, Adnan Rakin, Shihui Yin, Mingoo Seok, Deliang Fan and Jae-sun Seo. “Leveraging Variability and Aggressive Quantization of In-Memory Computing for Robustness Improvement of Deep Neural Network Hardware Against Adversarial Input and Weight Attacks”. In: 58th Design Automation Conference (DAC), San Francisco, CA, 2021 [pdf]

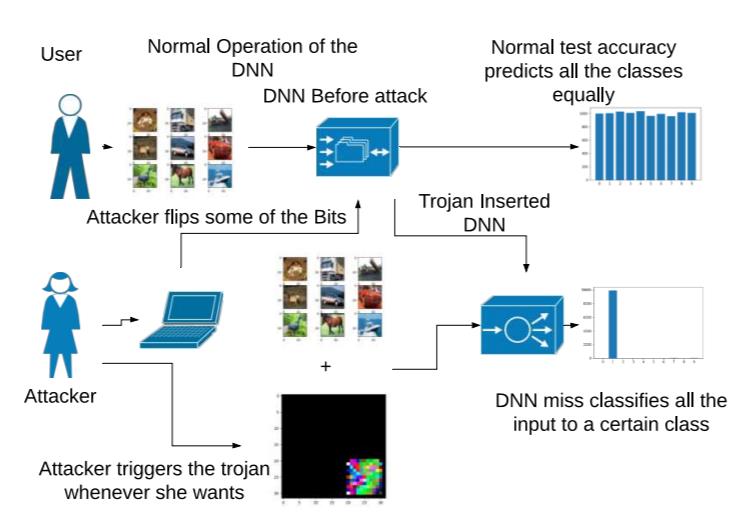

Adversarial weight attack to insert a Trojan into neural network

Security of modern Deep Neural Networks (DNNs) is under severe scrutiny as the deployment of these models becomes widespread in many intelligence-based applications. Most recently, DNNs have been attacked through Trojans, which can effectively infect the model during the training phase and are activated only by specific input patterns (i.e., triggers) during inference. In this work, for the first time, we propose a novel Targeted Bit Trojan (TBT), which eliminates the need for model re-training to insert the targeted Trojan. Our algorithm efficiently generates a trigger specifically designed to locate certain vulnerable bits of DNN weights stored in main memory (i.e., DRAM). The objective is that once the attacker flips these vulnerable bits, the network still operates with normal inference accuracy. However, when the attacker activates the trigger by embedding it in the input images, the network classifies all inputs to a certain target class. We demonstrate that flipping only several vulnerable bits found by our method, using available bit-flip techniques (i.e., row-hammer), can transform a fully functional DNN model into a Trojan-infected model. We perform extensive experiments on the CIFAR-10, SVHN and ImageNet datasets with both VGG-16 and ResNet-18 architectures. Our proposed TBT can classify 93% of the test images to a target class with as few as 82 bit-flips out of 88 million weight bits on ResNet-18 for the CIFAR-10 dataset.
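The attack cost quoted above is a bit-flip count, which is the Hamming distance between the original and the Trojan-infected weight memory. The following minimal sketch shows that bookkeeping under the assumption of 8-bit two's-complement weights. The helper names `bit_flips_needed` and `flip_fraction` and the example weights are hypothetical, not taken from the TBT code.

```python
def bit_flips_needed(original, modified, bits=8):
    """Count differing bits between two equally long lists of signed integer weights."""
    if len(original) != len(modified):
        raise ValueError("weight lists must have the same length")
    mask = (1 << bits) - 1               # keep only the stored low-order bits
    return sum(bin((a ^ b) & mask).count("1") for a, b in zip(original, modified))


def flip_fraction(n_flips, total_bits):
    """Fraction of the weight memory that the attacker has to modify."""
    return n_flips / total_bits


# Hypothetical weights: two of the four values differ by one bit each.
original = [12, -3, 100, 7]
trojaned = [12, -3, -28, 5]
print(bit_flips_needed(original, trojaned))   # 2
print(flip_fraction(82, 88_000_000))          # the ResNet-18 / CIFAR-10 figures above
```

With the figures reported above, the modified fraction is below one bit per million weight bits.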

Selected Related publications:

- [CVPR’20] Adnan Siraj Rakin, Zhezhi He and Deliang Fan, “TBT: Targeted Neural Network Attack with Bit Trojan,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 16-18, 2020, Seattle, Washington, USA [pdf] [open source code]

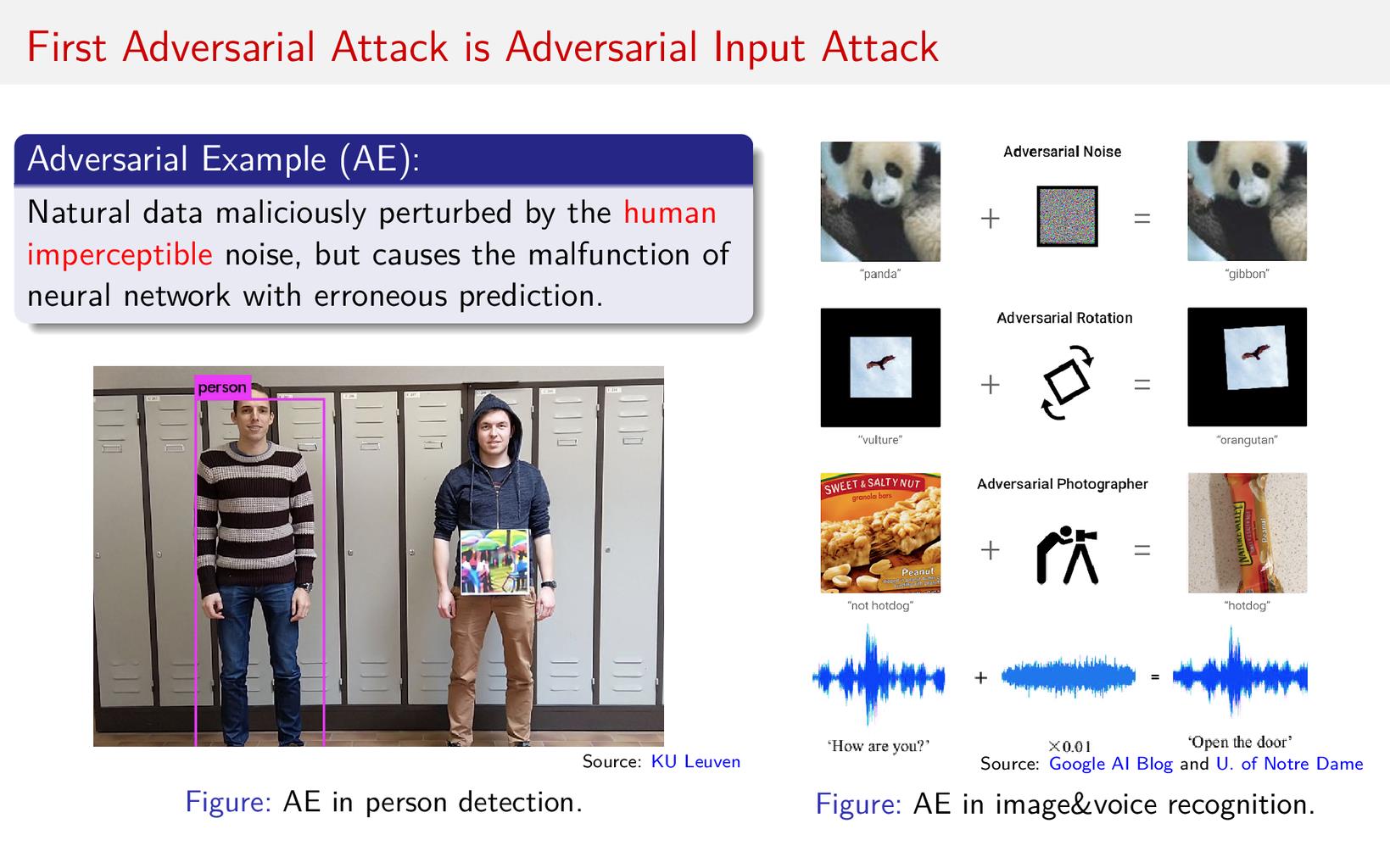

Adversarial example/input attack and defense

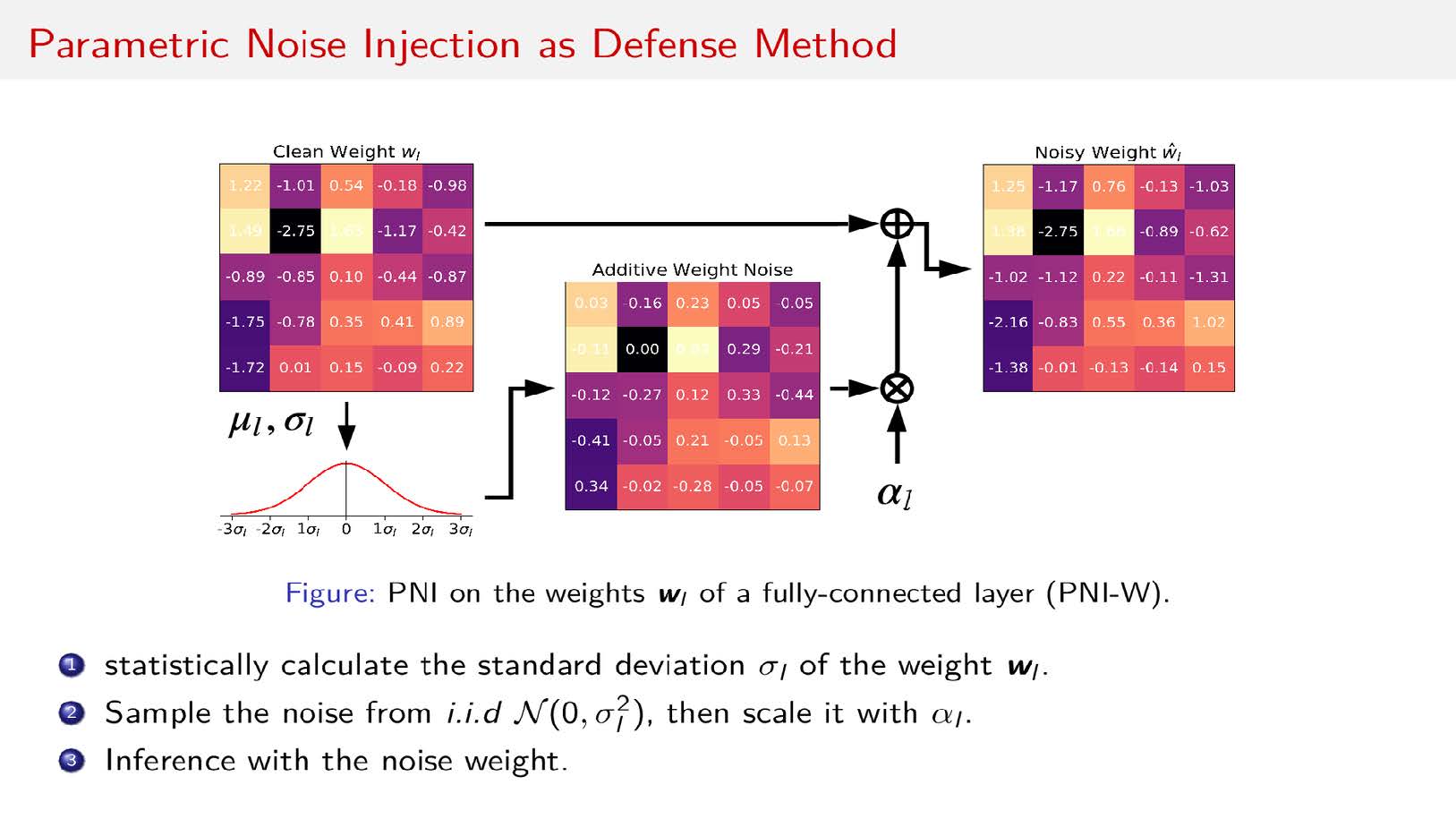

State-of-the-art Deep Neural Networks (DNNs) have achieved tremendous success in many real-world cognitive applications, such as image/speech recognition, object detection, and natural language processing. Despite this success, extensive recent theoretical studies show that DNNs are highly vulnerable to attacks by adversarial examples, a type of malicious input crafted by adding small and often imperceptible perturbations to legitimate inputs. Such adversarial examples can mislead a DNN model into predicting any targeted incorrect label with very high confidence, while still appearing recognizable to the human audio-visual system. The security of deep learning systems has therefore emerged as a major concern in DNN-powered applications, especially safety- and security-sensitive ones such as driverless cars and UAVs. Our research focuses on developing new methods to improve the robustness of DNNs against adversarial example attacks.
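To make the notion of a small crafted perturbation concrete, the following minimal sketch applies the fast gradient sign method (FGSM), a standard attack from the literature that is used here only for illustration and is not a method described in this overview. It assumes a toy logistic classifier. The weights, input, and `eps` are hypothetical, and the clipping to a valid input range that image attacks normally apply is omitted.

```python
import math


def predict(w, b, x):
    """Probability of class 1 under a toy logistic classifier."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w, b, x, y, eps):
    """Return x + eps * sign(dL/dx) for the binary cross-entropy loss L."""
    p = predict(w, b, x)
    grad_x = [(p - y) * wi for wi in w]          # dL/dx for logistic regression
    sign = lambda g: (g > 0) - (g < 0)           # -1, 0 or +1
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]


# Hypothetical classifier and input whose true label is 1.
w, b = [2.0, -3.0, 1.0], 0.0
x, y, eps = [0.5, 0.2, 0.1], 1, 0.2
x_adv = fgsm(w, b, x, y, eps)
print(predict(w, b, x) > 0.5)      # True: the clean input is classified as 1
print(predict(w, b, x_adv) > 0.5)  # False: the perturbed input is misclassified
```

Each feature moves by at most `eps`, yet the prediction flips. This is the behavior that robustness methods try to prevent.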

Selected Related publications:

- [CVPR’19] Zhezhi He*, Adnan Siraj Rakin* and Deliang Fan, “Parametric Noise Injection: Trainable Randomness to Improve Deep Neural Network Robustness against Adversarial Attack,” Conference on Computer Vision and Pattern Recognition (CVPR), June 16-20, 2019, Long Beach, CA, USA (* The first two authors contributed equally) [pdf] [code in GitHub]

- [CVOPS’19] Yifan Ding, Liqiang Wang, Huan Zhang, Jinfeng Yi, Deliang Fan, and Boqing Gong, “Defending Against Adversarial Attacks Using Random Forests,” Workshop on The Bright and Dark Sides of Computer Vision: Challenges and Opportunities for Privacy and Security, June 16-20, 2019, Long Beach, CA, USA [pdf]

- [GLSVLSI’20] Adnan Siraj Rakin, Zhezhi He, Li Yang, Yanzhi Wang, Liqiang Wang, Deliang Fan, “Robust Sparse Regularization: Simultaneously Optimizing Neural Network Robustness and Compactness”, 30th edition of the ACM Great Lakes Symposium on VLSI (GLSVLSI), September 7-9, 2020 (invited) [pdf]

- [ISVLSI’19] Adnan Siraj Rakin and Deliang Fan, “Defense-Net: Defend Against a Wide Range of Adversarial Attacks through Adversarial Detector,” IEEE Computer Society Annual Symposium on VLSI, 15 – 17 July 2019, Miami, Florida, USA

- Adnan Siraj Rakin, Jinfeng Yi, Boqing Gong, Deliang Fan, “Defend Deep Neural Networks Against Adversarial Examples via Fixed and Dynamic Quantized Activation Functions” arXiv:1807.06714, July, 2018

- Adnan Siraj Rakin, Zhezhi He, Boqing Gong, Deliang Fan, “Blind Pre-Processing: A Robust Defense Method Against Adversarial Examples” arXiv:1802.01549 Feb, 2018